The last session of this three-week workshop discussed how we assess risk and test our assumptions.

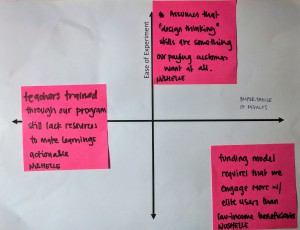

For the first part of the class, we wrote down three key risks on stickies and assigned them to a box on the Lean Canvas – mine were around whether our paying customers were interested in design thinking methods at all, whether our funding model would require us to spend the bulk of our time catering to our paying customers rather than our low-income beneficiaries, and (if we focus on teacher training over student engagement) whether a lack of resources would prevent teachers from being able to implement what they learned.

Reading through the notes written by others in our class made it clear that the most well-articulated risks are those that have an implicit benchmark and are measurable. Most risks fell in the solution/unique value proposition categories, followed by revenue streams and channels.

Experimental design

Running an experiment to test our assumptions requires the following components:

- Hypothesis: the key assumption to test

- Subjects: who you will test the assumption with, how they will be recruited and by when

- Interventions: how you’ll test the assumption

- Data: what data will be collected, and how it will help formulate a new hypothesis if it invalidates the original one

- Decision rule: what type of response will be considered as validating the hypothesis and what types of responses will be considered as invalidating

Here are the experiments I came up with:

Experiment #1: Will they pay for it?

Test subjects: 10 businesses/organizations I reach out to as an IEP GPI Ambassador, to chat about the links between creating innovative work environments and positive peace-building

Interventions: At the end of the presentation, I’ll offer to run a short (paid) workshop in the future, demonstrating the skills Building Bridges teaches and how they are applicable in multiple contexts; funds will go to BB operations

Data: If they agree, all good. If they offer other types of benefits in return – e.g. use of their spaces for BB, it indicates some support but not the kind I expected. If they decline, oh well.

Decision rule: If we get 3/10 firms on board, I think that’s a hugely promising start to some potential strong partnerships.

Experiment #2: Will we be able to prioritize our key activities with this funding model?

Test subjects: Same as above.

Interventions: Same as above.

Data: I’ll offer the workshops in different ways – pitch it as a potential partnership, as a CSR project, as a one-time employee benefit to different companies – and see what elicits the highest willingness to partner with us.

Decision rule: If companies are willing to offer, for a 2-hour taster session, an amount that corresponds to 20% of our regular workshop series costs, I take that as a promising sign of willingness to pay (WTP) for a more detailed 2-3 day corporate workshop. That workshop would cover all workshop costs while still requiring <20% of the time I would put into the regular BB sessions. It may turn out differently, though, and they may want to partner in other ways, so I’d have to re-think that funding model.

Experiment #3: Will teachers use what they learn?

Test subjects: Teachers at St. John’s MMV.

Interventions: During the summer workshop series [for kids], I’ll facilitate teacher participation by (1) inviting them to an exhibition, (2) offering interested teachers materials (activity guide of easy challenges + worksheets) to adapt some of the activities for their own classes.

Data: If teachers express interest, it indicates that they might enjoy a workshop tailored for them. If they agree to take the materials or ask questions, that indicates further buy-in. If they share stories of actually completing the challenges with their classes, then it’s possible to implement with few resources.

Decision rule: I’ll try to distribute materials to 5 interested teachers. If 2 follow through, I’d consider that a success.
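As a rough sketch, the decision rules for Experiments #1 and #3 boil down to simple threshold checks. The Python below is only an illustration: the `DecisionRule` class and its field names are my own assumptions, and the outcomes passed in at the end are made-up placeholders, not real results.

```python
from dataclasses import dataclass


@dataclass
class DecisionRule:
    """Threshold-style rule: validated if enough subjects respond positively."""
    name: str
    subjects: int       # how many subjects are approached
    min_positive: int   # positive responses needed to call the hypothesis validated

    def is_validated(self, positive: int) -> bool:
        # Guard against impossible counts before applying the threshold.
        if not 0 <= positive <= self.subjects:
            raise ValueError("positive must be between 0 and the number of subjects")
        return positive >= self.min_positive


# Thresholds taken from the decision rules above (3 of 10 firms, 2 of 5 teachers).
will_they_pay = DecisionRule("Experiment #1: Will they pay for it?", subjects=10, min_positive=3)
will_teachers_use_it = DecisionRule("Experiment #3: Will teachers use what they learn?", subjects=5, min_positive=2)

if __name__ == "__main__":
    # Hypothetical outcomes, for illustration only.
    print(will_they_pay.is_validated(positive=3))
    print(will_teachers_use_it.is_validated(positive=1))
```

Experiment #2 is left out on purpose, since its rule depends on an amount offered rather than a count of positive responses.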

Which assumptions to test first?

Interestingly, I thought we should have done this exercise before designing the experiments. We took our three risk-stickies and tried to rank them by how important the results of each experiment would be. In my case, it ended up being funding model > selling design thinking > teacher implementation of trainings.

Then, we tried to gauge how easy it would be to design an experiment. Selling design thinking was, in my case, the easiest one, and the graph looked like this.

It looks like Experiment #1 is the easiest. I’m not sure if it’s worth building Experiment #2 into it while still running #3 in the background, since I’ll be working at St. John’s this summer. I think the plan should be to keep it simple, and play it by ear.